At what level should you automate tests? Unit tests only? GUI-driven tests? Something else? In this blog post, I reflect on a recent experience that brought both pain and value, and on how I am coming to imagine a portfolio approach to test automation.

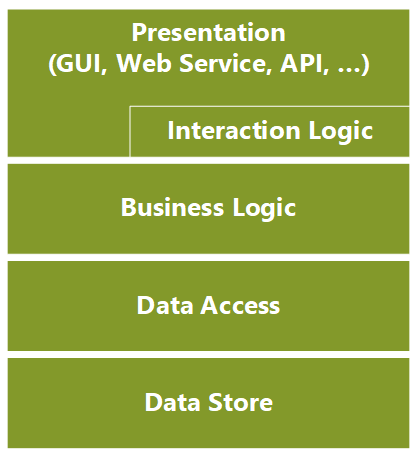

Consider an example application or system (such as a web application or a mobile application). When viewed in layers, it might look like this:

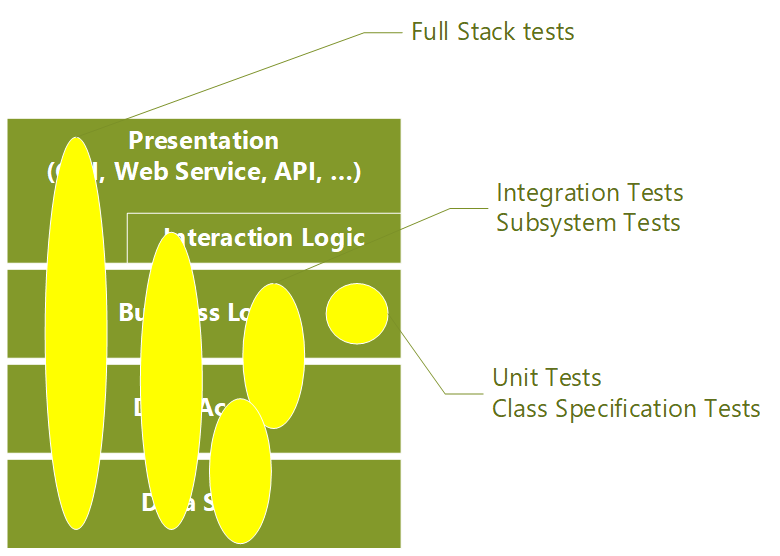

When I write an automated test for such a system, there are different levels of the system that might be tested.

I think of these in the following terms:

- “Unit Tests” and “Class Specification Tests” – these test individual modules or classes

- “Integration Tests” or “Subsystem Tests” – these test multiple classes or modules working together, but not the whole system

- “Full Stack Tests” – these test the whole system or application, perhaps through the GUI or APIs
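To make the first two levels more concrete, here is a minimal Python sketch. Everything in it (`PriceCalculator`, `InMemoryOrderRepository`, `OrderService`) is a hypothetical stand-in for the layers in the diagram, not code from any of our projects. The class specification tests exercise one class in isolation, while the subsystem test wires the service, data, and domain classes together but stays below the GUI.

```python
import unittest
from dataclasses import dataclass
from typing import Dict, List


# --- Hypothetical domain layer: one class with no collaborators ---
class PriceCalculator:
    """Computes an order total after applying a flat discount rate."""

    def __init__(self, discount_rate: float = 0.0):
        self.discount_rate = discount_rate

    def total(self, prices: List[float]) -> float:
        return round(sum(prices) * (1 - self.discount_rate), 2)


@dataclass
class Order:
    order_id: str
    prices: List[float]


# --- Hypothetical data layer: an in-memory stand-in for a real database ---
class InMemoryOrderRepository:
    def __init__(self) -> None:
        self._orders: Dict[str, Order] = {}

    def save(self, order: Order) -> None:
        self._orders[order.order_id] = order

    def get(self, order_id: str) -> Order:
        return self._orders[order_id]


# --- Hypothetical service layer: coordinates the domain and data layers ---
class OrderService:
    def __init__(self, repository: InMemoryOrderRepository, calculator: PriceCalculator):
        self.repository = repository
        self.calculator = calculator

    def place_order(self, order_id: str, prices: List[float]) -> float:
        self.repository.save(Order(order_id, list(prices)))
        return self.calculator.total(prices)

    def order_total(self, order_id: str) -> float:
        return self.calculator.total(self.repository.get(order_id).prices)


class PriceCalculatorSpec(unittest.TestCase):
    """Unit / class specification tests: a single class in isolation."""

    def test_applies_discount_rate(self):
        self.assertEqual(PriceCalculator(discount_rate=0.1).total([10.0, 20.0]), 27.0)

    def test_empty_order_totals_zero(self):
        self.assertEqual(PriceCalculator().total([]), 0.0)


class OrderServiceSubsystemTest(unittest.TestCase):
    """Integration / subsystem test: several real classes working together,
    exercised below the GUI."""

    def test_saved_order_can_be_retotaled(self):
        service = OrderService(InMemoryOrderRepository(), PriceCalculator(discount_rate=0.5))
        service.place_order("A-1", [4.0, 6.0])
        self.assertEqual(service.order_total("A-1"), 5.0)

# A full stack test would instead drive the deployed app through its GUI or
# public API, so it is omitted from this self-contained sketch.
```

The tests can be run with `python -m unittest <file>`. The in-memory repository is an assumption made to keep the sketch self-contained; a real subsystem test might use a test database instead.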

As teams I’ve worked with have implemented different kinds of test automation, I’ve noticed the following patterns in these different kinds of tests:

Unit Tests and Class Specification Tests

| Advantages | Disadvantages |
| --- | --- |

Integration Tests and Subsystem Tests

| Advantages | Disadvantages |
| --- | --- |

Full Stack Tests

| Advantages | Disadvantages |
| --- | --- |

What’s to be done?

I’ve noticed that when teams focus on only one of these kinds of testing, they get both the advantages and the disadvantages of that kind of testing. For example, a team that focuses only on unit/class-level tests can create reusable, modular classes, but runs into unexpected behavior when those classes are combined into the full system. A team that focuses on GUI-level tests sees few integration bugs, but finds that its test suite becomes less and less useful over time because the tests are fragile and the suite takes a long time to run.

While I don’t believe there is “one right answer,” an approach I’m coming to is to think of the overall test suite (including manual tests, which I have not discussed here) as a portfolio. The question then becomes: “What mix of tests will provide us with the best value for our investment?” Factors in determining a test mix might include the following questions:

- What kinds of issues create the most adverse impacts for us? How could we mitigate those first?

- If we scale up a certain kind of testing (do more of it), how will that impact us? How will it benefit us?

- What mix of skills and experience do we have? What investments would we need to make to extend our current capabilities?

- What technical capabilities for testing exist on our platforms? What would we need to invest to extend them?

- What’s needed to enable test-first development approaches (TDD and BDD)?

- How can we get fast feedback?

For our current environment, my favorite mix of tests is:

- Cucumber-style integration tests that run most of the application stack but bypass the GUI – these allow BDD on our desktop development machines, even though our targets are a web app (host side) and an Android app (device side).

- Class specification tests – these allow fast BDD/TDD at the class level.

- A small number of full stack tests that help ensure the overall application integration and user flows are in good shape.
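The first item in that mix, a Cucumber-style test that bypasses the GUI, might look roughly like the following Python sketch. It is an illustration under stated assumptions, not Cucumber’s or SpecFlow’s API: a tiny hand-rolled step registry matches each Given/When/Then line to a function by regular expression, and the step functions call a hypothetical `DeviceRegistry` service directly instead of clicking through a web page or an Android screen.

```python
import re


# Hypothetical application service that sits just below the GUI layer.
class DeviceRegistry:
    def __init__(self) -> None:
        self._devices = {}

    def register(self, serial: str) -> None:
        if serial in self._devices:
            raise ValueError(f"device {serial} already registered")
        self._devices[serial] = "offline"

    def report_heartbeat(self, serial: str) -> None:
        self._devices[serial] = "online"

    def status(self, serial: str) -> str:
        return self._devices[serial]


# Minimal hand-rolled step registry: a list of (compiled regex, step function).
_STEPS = []


def step(pattern: str):
    """Decorator that binds a step function to a regex over the step text."""
    def decorator(func):
        _STEPS.append((re.compile(pattern), func))
        return func
    return decorator


@step(r"^a device with serial (\S+) is registered$")
def register_device(ctx, serial):
    ctx["registry"].register(serial)


@step(r"^the device (\S+) sends a heartbeat$")
def send_heartbeat(ctx, serial):
    ctx["registry"].report_heartbeat(serial)


@step(r"^the status of (\S+) is (\w+)$")
def check_status(ctx, serial, expected):
    actual = ctx["registry"].status(serial)
    assert actual == expected, f"expected {expected}, got {actual}"


def run_scenario(text: str) -> int:
    """Runs each Given/When/Then/And line against the first matching step.
    Returns the number of steps executed; raises if a step is undefined or fails."""
    ctx = {"registry": DeviceRegistry()}  # fresh application state per scenario
    executed = 0
    for raw in text.strip().splitlines():
        keyword, _, body = raw.strip().partition(" ")
        if keyword not in ("Given", "When", "Then", "And"):
            continue  # skip "Scenario:" headers and blank lines
        for pattern, func in _STEPS:
            match = pattern.match(body)
            if match:
                func(ctx, *match.groups())
                executed += 1
                break
        else:
            raise LookupError(f"undefined step: {raw.strip()}")
    return executed


SCENARIO = """
Scenario: A registered device comes online
  Given a device with serial ABC123 is registered
  Then the status of ABC123 is offline
  When the device ABC123 sends a heartbeat
  Then the status of ABC123 is online
"""

print(run_scenario(SCENARIO))  # prints 4
```

Because the steps call the service layer directly, no browser or device is needed to run the scenario, which is what makes this style practical for BDD on a desktop.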

Resources:

- For a good introduction to Behavior-Driven Development, read The RSpec Book. While the book is specifically about Ruby, Cucumber and RSpec, the concepts port easily into other frameworks.

- For BDD with C#, we use SpecFlow.

Originally published October 5, 2017, on the Innovative Software Engineering blog. Republished with permission.

Update: Some time after writing this article, I ran across an article on The Practical Test Pyramid, which I often link people to for further reading.